Abstract

This article addresses recurrent challenges in applied quantitative computational project development, statistics and econometrics, particularly in collaborative environments; as encoding mismatches and inconsistent date-time conventions). The foundations of character encoding,

the role of Oracle’s National Language Support (NLS) parameters, system locales, and

best practices for mitigating such problems are examines. Drawing on applied computational experience, the article situates these

issues within broader principles of reproducible research and data

integrity. Empirical examples of R and Python integration with Oracle

databases are used to illustrate problems and propose solutions.

1. Introduction

Numerical precision and robust data management are fundamental in quantitative computing and research, such as modern econometrics. Implied that integration of statistical software and programming languages with relational databases has become a cornerstone. These implied some challenge that are heightened when working with multilingual contexts, regulatory reporting and international datasets, where consistency in encoding and date–time conventions is essential.

A frequent source of difficulty arises when the database management

system (DBMS), client libraries, and statistical environments employ

inconsistent encodings or date–time formats. Such inconsistencies can

undermine data integrity and erode confidence in results.

This article addresses such challenges, focusing on empirical cases of C++, R and Python integration with Oracle databases. Draws upon applied experience and literature review to illustrate common challenges and their best practice resolutions.

2. Theoretical Background

2.1 Encoding

Encoding specifies the mapping between binary sequences and human-readable text. UTF-8, standardized by the Unicode Consortium, supports the full range of Unicode characters (The Unicode Consortium,

2024). By contrast, others formats such as Windows-1252 (CP1252) is a single-byte encoding

widely used in Western European contexts.

A mismatch occurs when data written

under one encoding is interpreted using another, often resulting in

corrupted characters or unreadable outputs. For example, bytes intended

as UTF-8 may be misinterpreted under CP1252, producing nonsensical

characters or escaped sequences.

2.2 Oracle’s NLS Parameters

In Oracle databases, the National Language Support (NLS) system governs linguistic and encoding behavior. Key parameters include:

-

NLS_CHARACTERSET: Encoding for CHAR and VARCHAR2 columns.

-

NLS_NCHAR_CHARACTERSET: Encoding for NCHAR and NVARCHAR2 types (often AL16UTF16).

-

NLS_LANG: Client-side declaration of expected encoding.

When NLS_LANG diverges from the database’s character set, Oracle may perform implicit conversions, leading to corrupted text.

2.3 Date and Time

Date and time handling constitutes another recurrent source of errors. Adopting the ISO 8601 format (YYYY-MM-DD HH:MM:SS) is a widely recognized best practice, that minimizes ambiguity. Inconsistent handling across systems often results in errors that remain undetected until late stages of analysis.

2.4 R Locales and Python Configuration

R relies on system locale settings (e.g., LC_CTYPE) to determine string encoding and conversion. Programmatic adjustment is possible via Sys.setlocale(), although must be carefully scoped, as improper use may alter unrelated behaviors such as numeric formatting.

Python exhibits similar sensitivities with similar system configurations, and often requires explicit encoding declarations.

3. Case Studies

3.1 Encoding Mismatches in R–Oracle Integration

In a representative case, an Oracle database was configured with:

Meanwhile, the R client declared NLS_LANG = AMERICAN_AMERICA.AL32UTF8. This misalignment caused Oracle to deliver UTF-8 bytes that R printed as escaped octal sequences. This misalignment can disrupt the reproducibility and required post-hoc decoding.

3.2 Reproducibility Across Heterogeneous Systems

Collaborative projects often encounter reproducibility issues when code developed on one machine fails on another due to divergent locale configurations, DBMS parameters, or operating system updates. Although the source code remains identical, execution errors manifest in data insertion and transformation tasks.

4. Best Practices

Based on theoretical and applied insights, the following best practices are recommended:

-

Encoding alignment: Ensure NLS_LANG matches the database NLS_CHARACTERSET.

-

Programmatic locale management: Apply Sys.setlocale("LC_CTYPE", ...) at runtime in R; avoid persistent system-level changes.

-

ISO compliance for date–time: Define session-level formats (e.g., YYYY-MM-DD HH24:MI:SS) to ensure cross-platform consistency.

-

Normalization of legacy data: Use tools such as iconv() to correct pre-existing corruption.

-

UTF-8 for scripts: Standardize all codebases in UTF-8 to improve portability.

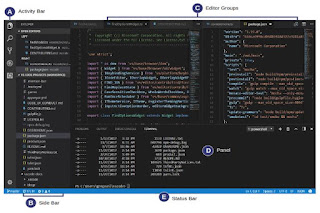

Ilustrative code:

C++

// g++ -std=c++17 -lodbc -o demo_odbc_utf8 demo_odbc_utf8.cpp

#include <sqlext.h>

#include <string>

#include <iostream>

#include <vector>

// Simple UTF-8 -> UTF-16 converter for demo (use ICU in production)

#include <codecvt>

#include <locale>

static std::u16string to_utf16(const std::string& s) {

std::wstring_convert<std::codecvt_utf8_utf16<char16_t>, char16_t> conv;

return conv.from_bytes(s);

}

static std::u16string u16(const char* s) { return to_utf16(std::string(s)); }

#define CHECK_RC(rc, handle, type, msg) \

if ((rc) != SQL_SUCCESS && (rc) != SQL_SUCCESS_WITH_INFO) { \

SQLWCHAR state[6]; SQLINTEGER native; SQLWCHAR text[256]; SQLSMALLINT len; \

SQLGetDiagRecW(type, handle, 1, state, &native, text, 255, &len); \

std::wcerr << L"[ODBC] " << msg << L" SQLSTATE=" << (wchar_t*)state \

<< L" MSG=" << (wchar_t*)text << std::endl; \

exit(1); \

}

int main() {

SQLHENV henv = SQL_NULL_HENV;

SQLHDBC hdbc = SQL_NULL_HDBC;

SQLHSTMT hstmt = SQL_NULL_HSTMT;

// 1) Allocate environment & set ODBC version

SQLRETURN rc = SQLAllocHandle(SQL_HANDLE_ENV, SQL_NULL_HANDLE, &henv);

CHECK_RC(rc, henv, SQL_HANDLE_ENV, L"Alloc ENV failed");

rc = SQLSetEnvAttr(henv, SQL_ATTR_ODBC_VERSION, (SQLPOINTER)SQL_OV_ODBC3_80, 0);

CHECK_RC(rc, henv, SQL_HANDLE_ENV, L"Set ODBC version failed");

// 2) Allocate connection and connect using wide function

rc = SQLAllocHandle(SQL_HANDLE_DBC, henv, &hdbc);

CHECK_RC(rc, hdbc, SQL_HANDLE_DBC, L"Alloc DBC failed");

// Connection string: adjust DRIVER/DBQ to your system

std::u16string conn_str =

u16("DRIVER={Oracle in OraClient19Home1};"

"DBQ=HOST:PORT/SERVICE;"

"UID=USER_NAME;PWD=SECRET;"

"StatementCacheSize=0;");

SQLWCHAR out[512];

SQLSMALLINT outLen = 0;

rc = SQLDriverConnectW(

hdbc, NULL,

(SQLWCHAR*)conn_str.c_str(), SQL_NTS,

out, 512, &outLen,

SQL_DRIVER_NOPROMPT

);

CHECK_RC(rc, hdbc, SQL_HANDLE_DBC, L"Connect failed");

// 3) Create statement

rc = SQLAllocHandle(SQL_HANDLE_STMT, hdbc, &hstmt);

CHECK_RC(rc, hstmt, SQL_HANDLE_STMT, L"Alloc STMT failed");

// 4) Normalize session: ISO formats + UTC

auto execW = [&](const std::u16string& sql){

rc = SQLExecDirectW(hstmt, (SQLWCHAR*)sql.c_str(), SQL_NTS);

CHECK_RC(rc, hstmt, SQL_HANDLE_STMT, L"Exec failed");

SQLFreeStmt(hstmt, SQL_CLOSE);

};

execW(u16("ALTER SESSION SET NLS_NUMERIC_CHARACTERS = '.,'"));

execW(u16("ALTER SESSION SET NLS_DATE_FORMAT = 'YYYY-MM-DD\"T\"HH24:MI:SS'"));

execW(u16("ALTER SESSION SET NLS_TIMESTAMP_TZ_FORMAT = 'YYYY-MM-DD\"T\"HH24:MI:SS.FF TZH:TZM'"));

execW(u16("ALTER SESSION SET TIME_ZONE = 'UTC'"));

// 5) Idempotent table

execW(u16(

"BEGIN "

" EXECUTE IMMEDIATE 'CREATE TABLE demo_utf8_ts ("

" id NUMBER GENERATED BY DEFAULT AS IDENTITY PRIMARY KEY,"

" name_utf8 VARCHAR2(200),"

" created_at TIMESTAMP WITH TIME ZONE"

" )'; "

"EXCEPTION WHEN OTHERS THEN IF SQLCODE != -955 THEN RAISE; END IF; "

"END;"));

// 6) Insert Unicode payload with wide parameter

std::u16string name = u16("José François – análise");

std::u16string insert_sql = u16(

"INSERT INTO demo_utf8_ts (name_utf8, created_at) "

"VALUES (?, SYSTIMESTAMP AT TIME ZONE 'UTC')"

);

rc = SQLPrepareW(hstmt, (SQLWCHAR*)insert_sql.c_str(), SQL_NTS);

CHECK_RC(rc, hstmt, SQL_HANDLE_STMT, L"Prepare insert failed");

// Bind parameter #1 as NVARCHAR (WCHAR at C level)

SQLUSMALLINT param = 1;

rc = SQLBindParameter(

hstmt, param, SQL_PARAM_INPUT, SQL_C_WCHAR, SQL_WVARCHAR,

name.size(), 0, (SQLPOINTER)name.c_str(), name.size()*sizeof(char16_t), NULL

);

CHECK_RC(rc, hstmt, SQL_HANDLE_STMT, L"Bind param failed");

rc = SQLExecute(hstmt);

CHECK_RC(rc, hstmt, SQL_HANDLE_STMT, L"Execute insert failed");

SQLFreeStmt(hstmt, SQL_CLOSE);

// 7) Select and print last row

execW(u16("SELECT * FROM demo_utf8_ts ORDER BY id DESC FETCH FIRST 1 ROWS ONLY"));

// For brevity, we don’t fetch columns here; in production,

// use SQLBindCol with SQL_C_WCHAR for text and SQL_C_TYPE_TIMESTAMP for timestamps.

// Cleanup

SQLFreeHandle(SQL_HANDLE_STMT, hstmt);

SQLDisconnect(hdbc);

SQLFreeHandle(SQL_HANDLE_DBC, hdbc);

SQLFreeHandle(SQL_HANDLE_ENV, henv);

std::wcout << L"Completed without encoding/timezone errors.\n";

return 0;

}

R

library(RODBC)

library(keyring)

# Secure credential retrieval

pwd <- keyring::key_get("db_name", "user_name")

dsn <- "dsn_name"

uid <- "user_name"

conn <- odbcDriverConnect(

paste0("DSN=", dsn, ";UID=", uid, ";PWD=", pwd),

believeNRows = FALSE

)

# Verify encoding in data base

sqlQuery(conn, "SELECT parameter, value

FROM nls_database_parameters

WHERE parameter IN ('NLS_CHARACTERSET','NLS_NCHAR_CHARACTERSET')")

# ALING WITH THE DATA BASE ENCODING

# Runtime locale

try(Sys.setlocale("LC_CTYPE", "en_US.UTF-8"), silent = TRUE)

# Align with Oracle DB encoding

Sys.setenv(NLS_LANG = "AMERICAN_AMERICA.WE8MSWIN1252")

# Define session-level settings

invisible(sqlQuery(conn, "ALTER SESSION SET NLS_NUMERIC_CHARACTERS = '.,'"))

invisible(sqlQuery(conn, "ALTER SESSION SET NLS_DATE_FORMAT = 'YYYY-MM-DD HH24:MI:SS'"))

df <- sqlQuery(conn, "SELECT * FROM my_table", stringsAsFactors = FALSE)

odbcClose(conn)

Python

# Python 3.11+

# pip install oracledb pandas

import oracledb

import pandas as pd

from datetime import datetime, timezone

# 1) Use UTF-8 end-to-end (client + network)

# oracledb supports explicit encodings:

conn = oracledb.connect(

user="USER_NAME",

password="SECRET",

dsn="HOST:PORT/SERVICE",

encoding="UTF-8", # client-side string encoding

nencoding="UTF-8" # national character set encoding

)

with conn.cursor() as cur:

# 2) Normalize session: numeric separators, ISO date format, UTC

cur.execute("ALTER SESSION SET NLS_NUMERIC_CHARACTERS = '.,'")

cur.execute("ALTER SESSION SET NLS_DATE_FORMAT = 'YYYY-MM-DD\"T\"HH24:MI:SS'")

cur.execute("ALTER SESSION SET NLS_TIMESTAMP_TZ_FORMAT = 'YYYY-MM-DD\"T\"HH24:MI:SS.FF TZH:TZM'")

cur.execute("ALTER SESSION SET TIME_ZONE = 'UTC'") # enforce UTC at session level

# 3) Create test table (idempotent)

cur.execute("""

BEGIN

EXECUTE IMMEDIATE '

CREATE TABLE demo_utf8_ts (

id NUMBER GENERATED BY DEFAULT AS IDENTITY PRIMARY KEY,

name_utf8 VARCHAR2(200),

created_at TIMESTAMP WITH TIME ZONE

)';

EXCEPTION

WHEN OTHERS THEN

IF SQLCODE != -955 THEN RAISE; END IF; -- -955: name is already used by an existing object

END;

""")

# 4) Round-trip insert with diacritics + tz-aware timestamp

name = "José François – análisis"

created = datetime.now(timezone.utc) # explicit UTC

cur.execute(

"INSERT INTO demo_utf8_ts (name_utf8, created_at) VALUES (:1, :2)",

[name, created]

)

conn.commit()

# 5) Retrieve as DataFrame

df = pd.read_sql("SELECT id, name_utf8, created_at FROM demo_utf8_ts ORDER BY id DESC FETCH FIRST 5 ROWS ONLY", conn)

print(df)

# Expect clean Unicode and UTC timestamps like: 2025-09-08T23:12:34.123456+00:00

5. Conclusion

Encoding and date–time inconsistencies represent a critical yet often underestimated source of error in quantitative computing environments. Misalignments between database character sets, client declarations, and statistical software locales can produce corrupted data, undermine reproducibility, and waste significant resources.

The adoption of best practices—aligning encodings, programmatically managing locales, enforcing ISO date–time standards, and saving scripts in UTF-8—enhances both the reliability and the reproducibility of computational research. These practices are essential in collaborative, cross-platform projects in applied statistics, econometrics, and quantitative finance.

6. Reference

Oracle Corporation. (2023). Oracle Database Globalization Support Guide. Redwood Shores, CA: Oracle Press.

The Unicode Consortium. (2024). The Unicode Standard, Version 15.1. Mountain View, CA: Unicode, Inc.

R Core Team. (2024). R: A Language and Environment for Statistical Computing. Vienna, Austria: R Foundation for Statistical Computing.